This post draws on a recent RFF discussion paper by RFF Senior Fellow Roger Cooke, in which he explores these topics in greater detail. Cooke is the Chauncey Starr Chair in Risk Analysis at RFF and lead author for Risk and Uncertainty in the recently released IPCC Fifth Assessment Report.

Present State of the Uncertainty Narrative

In 2010, the US National Research Council (NRC) illustrated reasoning under uncertainty about climate change using the calibrated uncertainty language of the 2005 Intergovernmental Panel on Climate Change (IPCC) Fourth Assessment Report. The NRC report bases its first summary conclusion on “high confidence” (at least 8 out of 10) or “very high confidence” (at least 9 out of 10) in six (paraphrased) statements:

- Earth is warming.

- Most of the warming over the last several decades can be attributed to human activities.

- Natural climate variability ... cannot explain or offset the long-term warming trend.

- Global warming is closely associated with a broad spectrum of other changes.

- Human-induced climate change and its impacts will continue for many decades.

- The ultimate magnitude of climate change and the severity of its impacts depend strongly on the actions that human societies take to respond to these risks.

What is the confidence that all these statements hold? In non-formalized natural language it is not even clear whether “all statements have a 0.8 chance of being true” means “each statement has a 0.8 chance of being true” or “there is a 0.8 chance that all statements are true.” Consider the second statement. Are the authors highly confident that “the earth is warming AND humans are responsible,” or are they highly confident that “GIVEN that the earth is warming, humans are responsible”? These are very different statements. Since the Earth's warming is asserted in the first statement, perhaps the second, conditional reading is meant. In that case, the likelihood of both statements holding is the product of their individual likelihoods: at 0.8 each, that product is 0.64. If the first two statements each enjoy "high confidence," then the two together can hold with only "medium confidence."
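The arithmetic can be sketched in a few lines of Python. This is only an illustration under stated assumptions: each statement is taken at the lower bound of "high confidence" (0.8), each confidence is read as conditional on the statements before it, and the function name `joint_confidence` is made up for this example.

```python
import math

def joint_confidence(conditional_confidences):
    """Chain rule: P(A1) * P(A2 | A1) * ... for a list of conditional confidences."""
    return math.prod(conditional_confidences)

# Assumption: every statement sits at the 0.8 lower bound of "high confidence".
first_two = joint_confidence([0.8, 0.8])
all_six = joint_confidence([0.8] * 6)

print(f"first two statements jointly: {first_two:.2f}")  # 0.64
print(f"all six statements jointly:   {all_six:.3f}")    # 0.262
```

Under this reading, six individually "high confidence" statements are jointly more likely false than true.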

Suppose the Nuclear Regulatory Commission licensed nuclear reactors based on the finding that each reactor's safety each year was “virtually certain” (99–100 percent probability). With 100 commercial nuclear reactors, each with a probability of 0.01 per year of a meltdown . . . well, do the math. That is the point: To reason under uncertainty you may have to do math. You can't do it by the seat of the pants. I am very highly confident that all of the statements above hold, but I am 100 percent certain that this way of messaging uncertainty in climate change won't help us get the uncertainty narrative right.
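Doing the math takes three lines. The sketch below assumes the reactors fail independently of one another, which the text does not state; the variable names are illustrative.

```python
# Assumptions: 100 reactors, each with a 0.01 chance of meltdown per year,
# and meltdowns at different reactors are independent events.
n_reactors = 100
p_meltdown = 0.01

p_no_meltdown_anywhere = (1 - p_meltdown) ** n_reactors
p_at_least_one = 1 - p_no_meltdown_anywhere
expected_meltdowns = n_reactors * p_meltdown

print(f"P(at least one meltdown this year) = {p_at_least_one:.3f}")  # 0.634
print(f"Expected meltdowns per year        = {expected_meltdowns:.1f}")  # 1.0
```

A fleet in which each reactor is "virtually certain" to be safe would, on these numbers, be expected to produce about one meltdown a year.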

Back to the Past

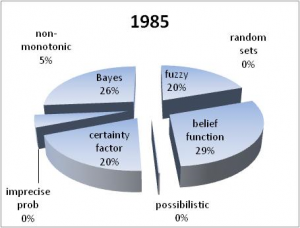

Figure 1. Word Fragment Counts in Uncertainty in Artificial Intelligence, 1985

In 1977 the artificial intelligence community launched a program to apply its computer chess skills to solving real-world problems, in particular, reasoning under uncertainty in science. Studying the strategies and heuristics of “grand masters” of science, its researchers concluded that the grand masters did not reason probabilistically, and they explored “alternative representations of uncertainty,” including certainty factors, degrees of possibility, fuzzy sets, belief functions, random sets, imprecise probabilities, and nonmonotonic logic, among many others. Fuzzy sets are of interest because reasoning with the IPCC's calibrated language seems to comply with the original fuzzy rule for propagating uncertainty: If the fuzzy uncertainty of "Quincy is a man" is equal to the fuzzy uncertainty of "Quincy is a woman" (i.e., 1/2), then the fuzzy uncertainty of "Quincy is a man AND a woman" is the minimum of these two, also 1/2. The NRC reasons as if high confidence in each of its six conclusions were sufficient to convey high confidence in all of them jointly. Maybe the problem of communicating uncertainty is that the communicators don’t understand uncertainty.
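To see how far the minimum rule can stray from probability, the sketch below compares it with the Fréchet bounds, the standard limits on the probability of a conjunction when only the individual probabilities are known. The function names are illustrative, and the six 0.8 values are again the assumed lower bound of "high confidence."

```python
def fuzzy_and(*degrees):
    """Original fuzzy rule for conjunction: the minimum of the degrees."""
    return min(degrees)

def frechet_bounds(*probs):
    """Lowest and highest P(all statements hold) consistent with the marginals."""
    lower = max(0.0, sum(probs) - (len(probs) - 1))
    upper = min(probs)
    return lower, upper

# Quincy: two statements, each with degree 1/2.
quincy_fuzzy = fuzzy_and(0.5, 0.5)
quincy_bounds = frechet_bounds(0.5, 0.5)

# Six statements, each assumed to hold with probability 0.8.
six_bounds = frechet_bounds(*([0.8] * 6))

print(quincy_fuzzy)   # 0.5
print(quincy_bounds)  # (0.0, 0.5)
print(six_bounds)     # (0.0, 0.8)
```

The minimum rule always returns the upper bound, the most optimistic value probability allows. For mutually exclusive statements such as the Quincy pair, the probability of the conjunction is the lower bound, zero.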

The proceedings of the premier conference “Uncertainty in Artificial Intelligence” have been digitized since 1985 and provide a unique record of the development of alternative representations of uncertainty. Figure 1 shows the relative word fragment count of various approaches in 1985. The largest component is “belief function,” followed by “Bayes,” “fuzzy,” and “certainty factor.” “Bayes,” a proxy for subjective probability, accounts for 26 percent of the total.

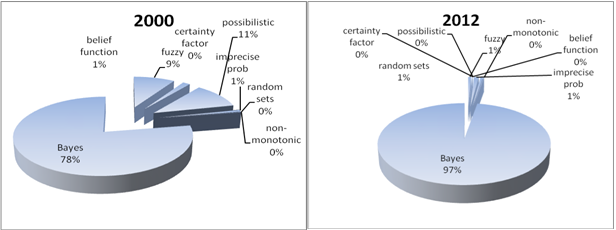

By 2000 the balance has shifted; "Bayes" now accounts for 79 percent of the count. In 2012, the count is 97 percent "Bayes."

Figure 2. Word Fragment Counts in Proceedings of Uncertainty in Artificial Intelligence, 2000 (left) and 2012 (right)

Climate change is the current theater of alternative uncertainties, and many of the discarded approaches are reappearing. Underlying this is the conviction that uncertainties in climate change are "Deep" or "Knightian" and therefore defy quantification in terms of probability.

A bandwagon of economists, not the least of whom is Sir Nicholas Stern, aver that where “we don’t know the probability distribution,” then “Knightian uncertainty” kicks in, which cannot be characterized by probabilities. That's good news to modelers with squishy parameters. Bandwagons don't stop at libraries, and proponents of Deep or Knightian uncertainty perhaps haven't read Knight: “We can also employ the terms ‘objective’ and ‘subjective’ probability to designate the risk and uncertainty respectively, as these expressions are already in general use with a signification akin to that proposed.”

Knight, writing in 1921, did not know how to measure subjective probabilities. Neither did he know how to measure “risk” or objective probabilities. The Laplace interpretation of probability, to which Knight’s definition of risk appeals, was moribund, if not dead, at the end of the nineteenth century. It was, after all, Richard von Mises who emphasized (originally in 1928) that objective probabilities can be measured as limiting relative frequencies of outcomes in a random sequence. Like many authors of this period, Knight appears to have been unaware of the role of (in)dependence assumptions and believed that objective probabilities are much more objective than modern probability warrants. The operationalization of partial belief as subjective probability awaited publication of philosopher Frank Ramsey's "Truth and Probability" in 1931 (published posthumously in a collection of his essays).

It is indeed significant that economists claiming that climate uncertainty cannot be described with probability hark back to a period when probability, both objective and subjective, was not well understood. However, Knight did anticipate the objective validation of expert subjective probabilities, which underpins science-based uncertainty quantification.

Forward Is the Only Way Out

Climate deniers use uncertainty to shift the burden of proof; alarmists use uncertainty to focus on the worst cases. Going back to 1921 isn’t the answer. The partial belief that Quincy is a woman is not equal to the partial belief that Quincy is a man AND a woman. I see no alternative to pushing forward with science-based uncertainty quantification: uncertain quantities are given operational meaning, and experts' subjective probabilities are quantified and tested as statistical hypotheses. Combinations of expert judgments are subject to empirical control to enable rational consensus. This has been going on for a long time, but is new for climate science. I described an example regarding ice sheet dynamics early last year.
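What it can mean to test an expert's probabilities as a statistical hypothesis is sketched below. The post does not spell out a procedure, so this is an assumption: it uses one common approach from structured expert judgment, in which the expert gives 5, 50, and 95 percent quantiles for quantities whose true values later become known, and a likelihood-ratio statistic measures how well the realizations fit those quantiles. The function names and the counts are hypothetical.

```python
import math

# If the expert's 5%, 50%, and 95% quantiles are statistically accurate,
# realizations should fall in the four inter-quantile bins at these rates.
EXPECTED = (0.05, 0.45, 0.45, 0.05)

def chi2_sf_3dof(x):
    """Survival function of the chi-square distribution with 3 degrees of freedom."""
    if x <= 0:
        return 1.0
    return math.erfc(math.sqrt(x / 2)) + math.sqrt(2 * x / math.pi) * math.exp(-x / 2)

def calibration_p_value(bin_counts):
    """Asymptotic p-value of the hypothesis that the expert is well calibrated.

    bin_counts[i] is how many realizations fell in the i-th inter-quantile bin.
    """
    n = sum(bin_counts)
    # Relative information of the observed frequencies with respect to EXPECTED.
    info = sum(
        (c / n) * math.log((c / n) / p)
        for c, p in zip(bin_counts, EXPECTED)
        if c > 0
    )
    return chi2_sf_3dof(2 * n * info)

# Hypothetical data: 20 test quantities per expert.
well_calibrated = calibration_p_value((1, 9, 9, 1))  # matches EXPECTED exactly
overconfident = calibration_p_value((6, 4, 4, 6))    # too many surprises in the tails

print(well_calibrated)
print(overconfident)
```

A p-value near 1 gives no reason to doubt the expert's stated quantiles, while a very small one says the realizations landed outside the expert's ranges far more often than the quantiles claim. Scores of this kind are what allow combinations of expert judgments to be placed under empirical control.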

Building rational consensus on uncertainty is not a mathematics problem; it’s an engineering problem, and it follows the laws of logic for partial belief. Once we learn how to define and measure performance in uncertainty quantification, we can start improving.